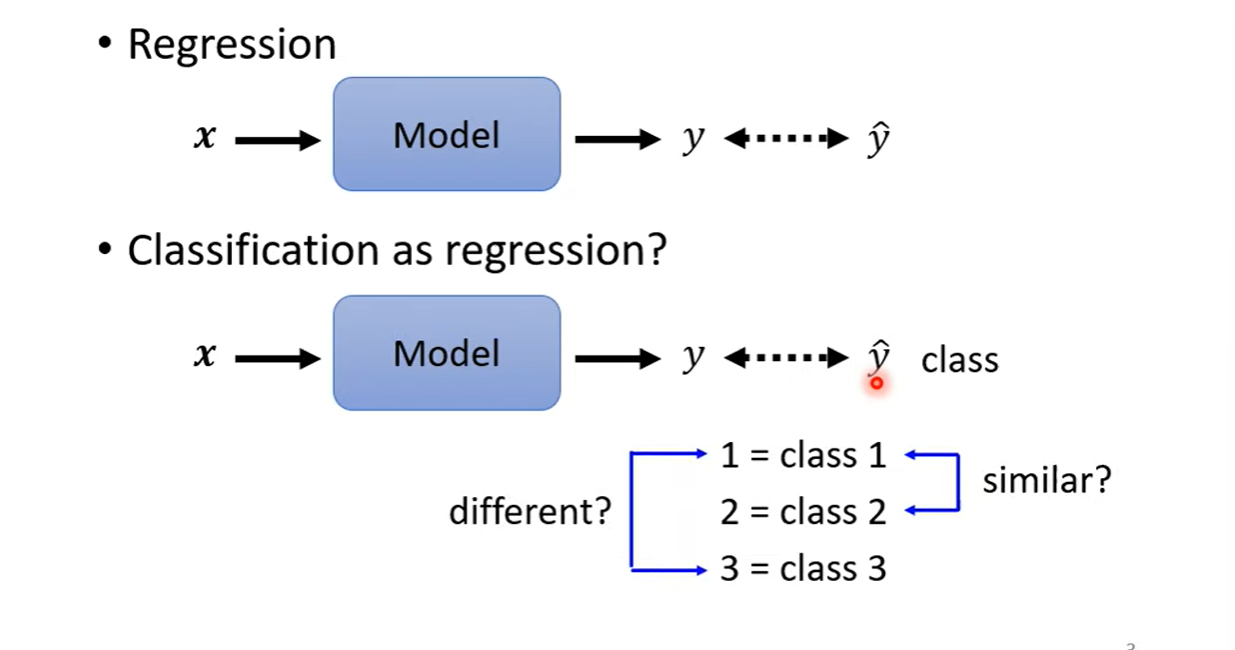

Classification as Regression?

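If the classes are encoded as the numbers 1, 2, 3 and treated as a regression target, the encoding implies that class 1 and class 2 are more similar, while class 1 and class 3 are less similar. That assumption may not hold for the actual classes.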

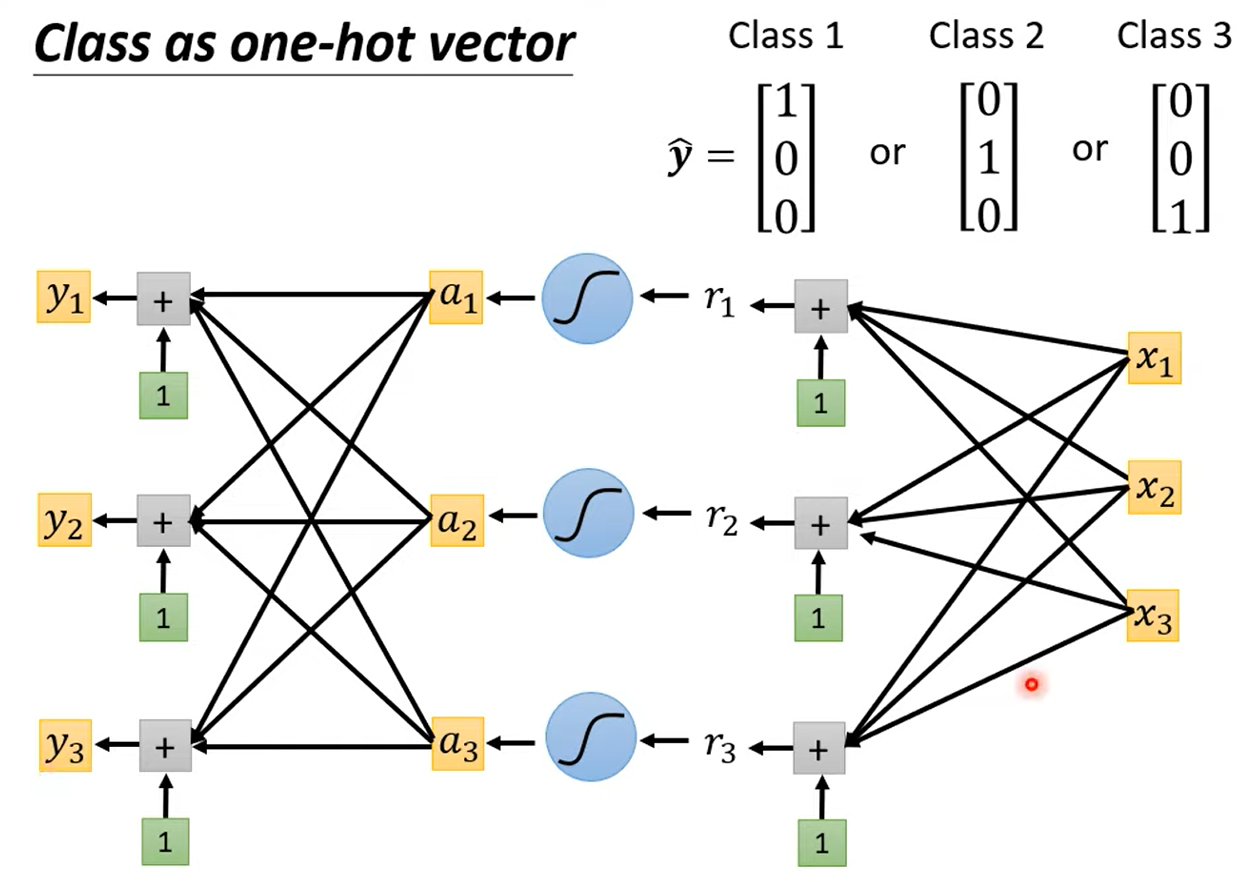

1 Class as one-hot vector

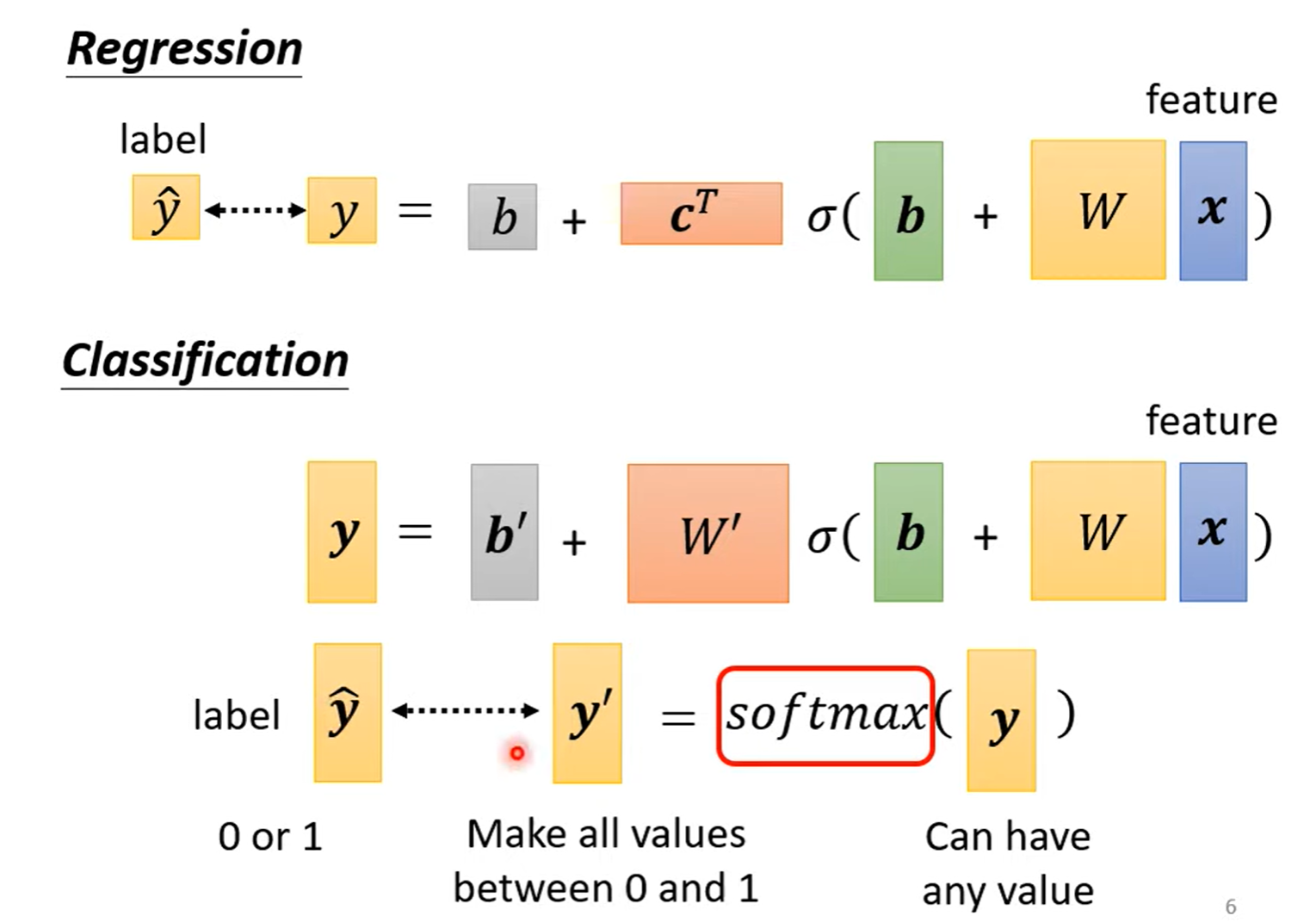
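As a minimal sketch of the one-hot idea (the helper names `one_hot` and `euclidean` are hypothetical, not from the lecture), the code below encodes three classes and checks that every pair of classes is the same distance apart, so no ordering or similarity between classes is implied:

```python
import math

def one_hot(class_index, num_classes):
    """Return a list with 1.0 at class_index and 0.0 elsewhere."""
    if not 0 <= class_index < num_classes:
        raise ValueError("class_index out of range")
    return [1.0 if i == class_index else 0.0 for i in range(num_classes)]

def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Encode classes 1, 2, 3 (indices 0, 1, 2) as one-hot vectors.
classes = [one_hot(i, 3) for i in range(3)]

# Pairwise distances between the encoded classes, keyed by class number.
distances = {
    (i + 1, j + 1): euclidean(classes[i], classes[j])
    for i in range(3)
    for j in range(i + 1, 3)
}

for pair, d in distances.items():
    print(pair, d)
```

With integer labels, class 1 and class 3 would be twice as far apart as class 1 and class 2; with one-hot vectors all pairs are equidistant.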

2 Regression vs. Classification

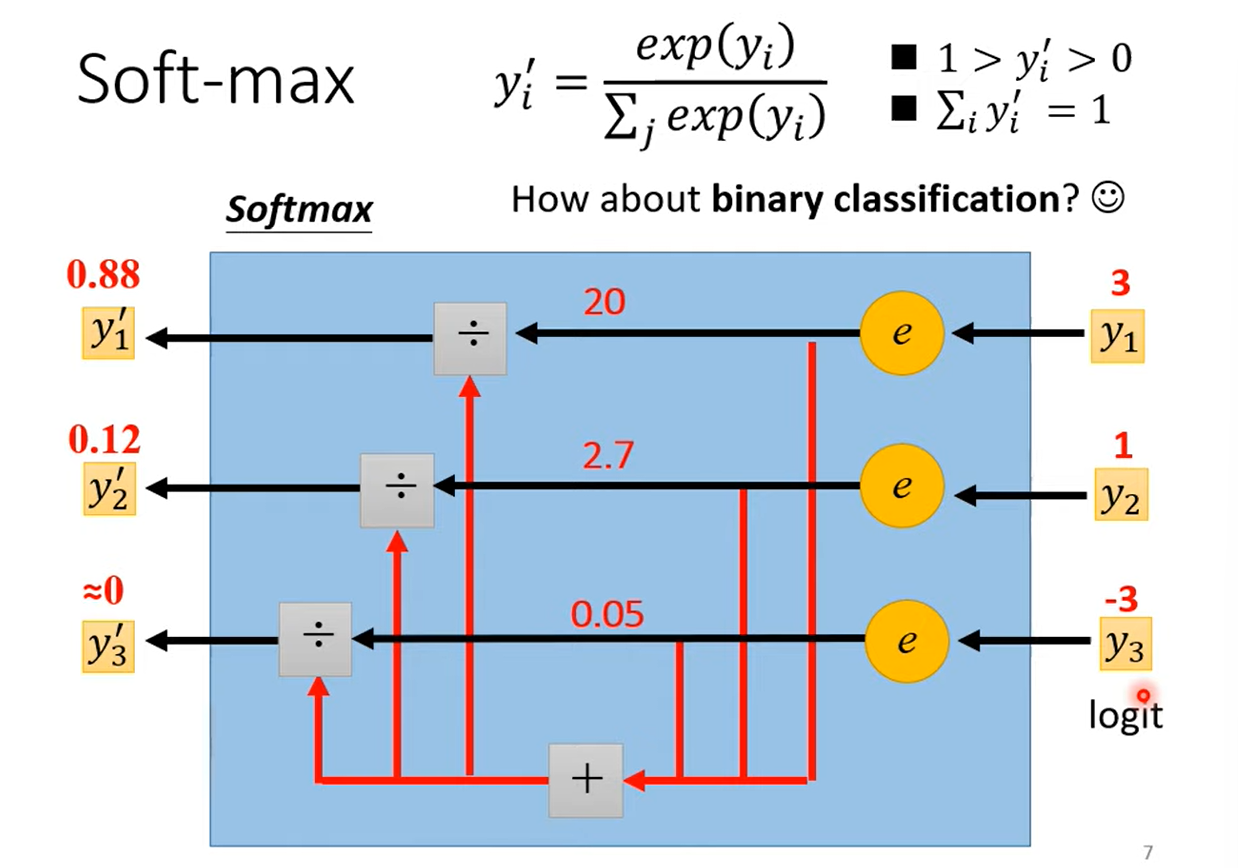

3 Soft-max

For binary classification (only 2 classes), sigmoid is more commonly used; in that case sigmoid and soft-max are equivalent.
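The equivalence can be checked numerically. The sketch below assumes the standard definitions of soft-max and sigmoid; the example scores `z1` and `z2` are arbitrary. For two classes, the soft-max probability of the first class equals the sigmoid of the difference between the two scores:

```python
import math

def softmax(logits):
    """Soft-max over a list of scores, shifted by the max for numerical stability."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(z):
    """Logistic sigmoid, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

# Arbitrary example scores for a two-class problem.
z1, z2 = 2.0, -1.0

p_softmax = softmax([z1, z2])[0]   # probability of class 1 via soft-max
p_sigmoid = sigmoid(z1 - z2)       # same probability via sigmoid of the score gap

print(p_softmax, p_sigmoid)
```

This follows from dividing the numerator and denominator of the two-class soft-max by `exp(z1)`.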

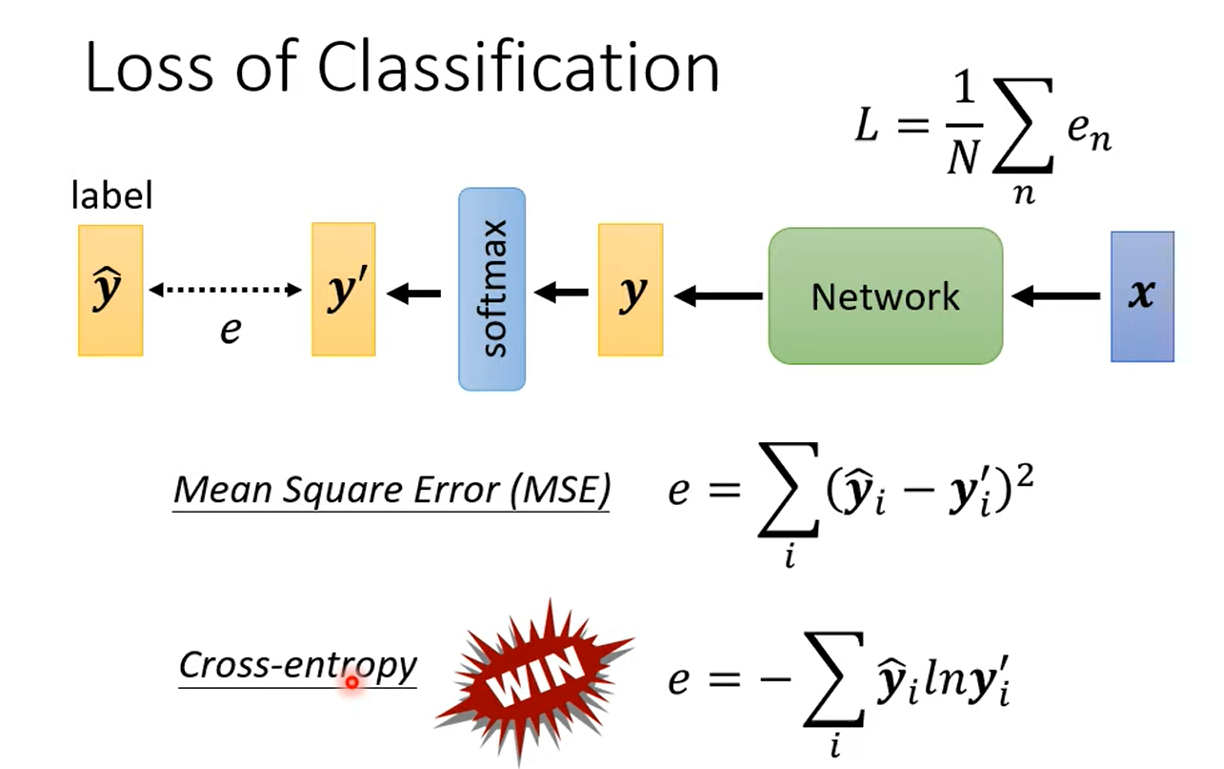

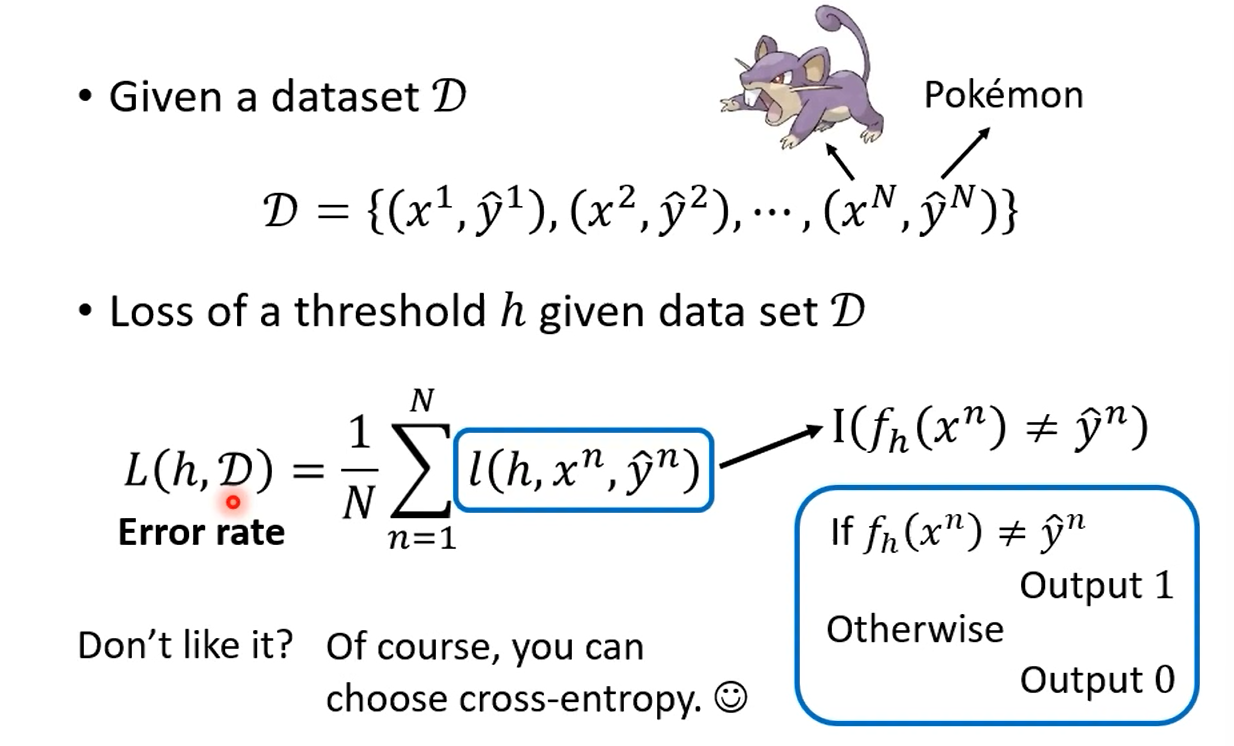

4 Loss of Classification

Minimizing cross-entropy is equivalent to maximizing likelihood.
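A short derivation of why this holds, under assumed notation: $\hat{y}^n$ is the one-hot label of example $n$, $y'^n$ is the soft-max output for that example, and $c_n$ is the index of its correct class. Because $\hat{y}^n$ is one-hot, only the correct-class term of the cross-entropy survives:

$$
e^n = -\sum_i \hat{y}^n_i \ln y'^n_i = -\ln y'^n_{c_n}
$$

Summing over $N$ independent training examples gives the negative log-likelihood of the labels:

$$
\sum_{n=1}^{N} e^n = -\sum_{n=1}^{N} \ln y'^n_{c_n} = -\ln \prod_{n=1}^{N} y'^n_{c_n}
$$

Since $-\ln$ is monotonically decreasing, the parameters that minimize the total cross-entropy are the same ones that maximize the likelihood $\prod_n y'^n_{c_n}$.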

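In PyTorch, the cross-entropy loss (`torch.nn.CrossEntropyLoss`) already includes soft-max, so the network should output raw scores (logits) without a final soft-max layer.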
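To make "cross-entropy includes soft-max" concrete, here is a plain-Python sketch of cross-entropy computed directly from logits. It illustrates the idea only and is not PyTorch's implementation; the function names are hypothetical:

```python
import math

def log_softmax(logits):
    """Log of the soft-max, computed with the log-sum-exp trick for stability."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum_exp for z in logits]

def cross_entropy_from_logits(logits, target_index):
    """Cross-entropy for a single example whose correct class is target_index."""
    return -log_softmax(logits)[target_index]

# With equal scores for 3 classes, the loss is ln(3).
loss_uniform = cross_entropy_from_logits([0.0, 0.0, 0.0], 0)

# A confident correct prediction gives a small loss,
# a confident wrong prediction gives a large one.
loss_correct = cross_entropy_from_logits([10.0, 0.0, 0.0], 0)
loss_wrong = cross_entropy_from_logits([0.0, 10.0, 0.0], 0)

print(loss_uniform, loss_correct, loss_wrong)
```

Applying soft-max inside the network and then passing the result to a loss that applies soft-max again would normalize twice, which is why the two steps are fused in one call.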

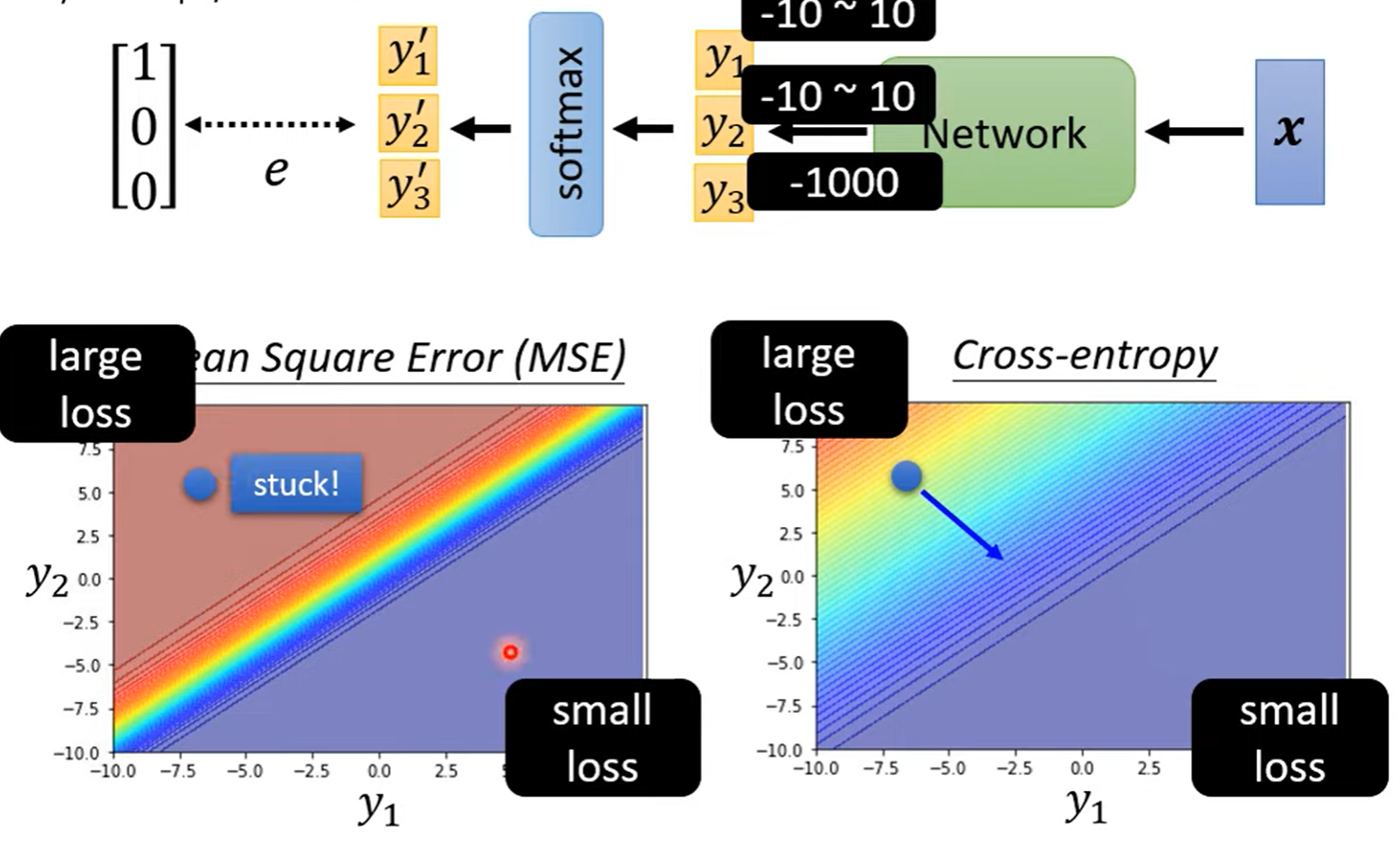

Changing the loss function can change the difficulty of optimization.
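One common illustration of this point, assumed here rather than taken from the notes, compares mean square error and cross-entropy on top of a soft-max. The sketch below computes the gradient of each loss with respect to the logits at a point where the prediction is confidently wrong; the logits and target are arbitrary example values:

```python
import math

def softmax(logits):
    """Soft-max over a list of scores, shifted by the max for numerical stability."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def ce_grad(logits, target):
    """Gradient of cross-entropy w.r.t. the logits: y - target."""
    y = softmax(logits)
    return [yi - ti for yi, ti in zip(y, target)]

def mse_grad(logits, target):
    """Gradient of sum_i (y_i - target_i)^2 w.r.t. the logits, through soft-max."""
    y = softmax(logits)
    d = [yi - ti for yi, ti in zip(y, target)]
    s = sum(di * yi for di, yi in zip(d, y))
    return [2.0 * yj * (dj - s) for yj, dj in zip(y, d)]

def norm(v):
    """Euclidean norm of a vector."""
    return math.sqrt(sum(x * x for x in v))

# The correct class is the first one, but the model strongly prefers the second.
logits = [-10.0, 10.0, 0.0]
target = [1.0, 0.0, 0.0]

ce_norm = norm(ce_grad(logits, target))
mse_norm = norm(mse_grad(logits, target))

print("cross-entropy gradient norm:", ce_norm)
print("MSE gradient norm:", mse_norm)
```

In this setup the cross-entropy gradient stays large while the MSE gradient is close to zero, so gradient descent starting from such a point would move much more slowly under MSE.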

5 Classification

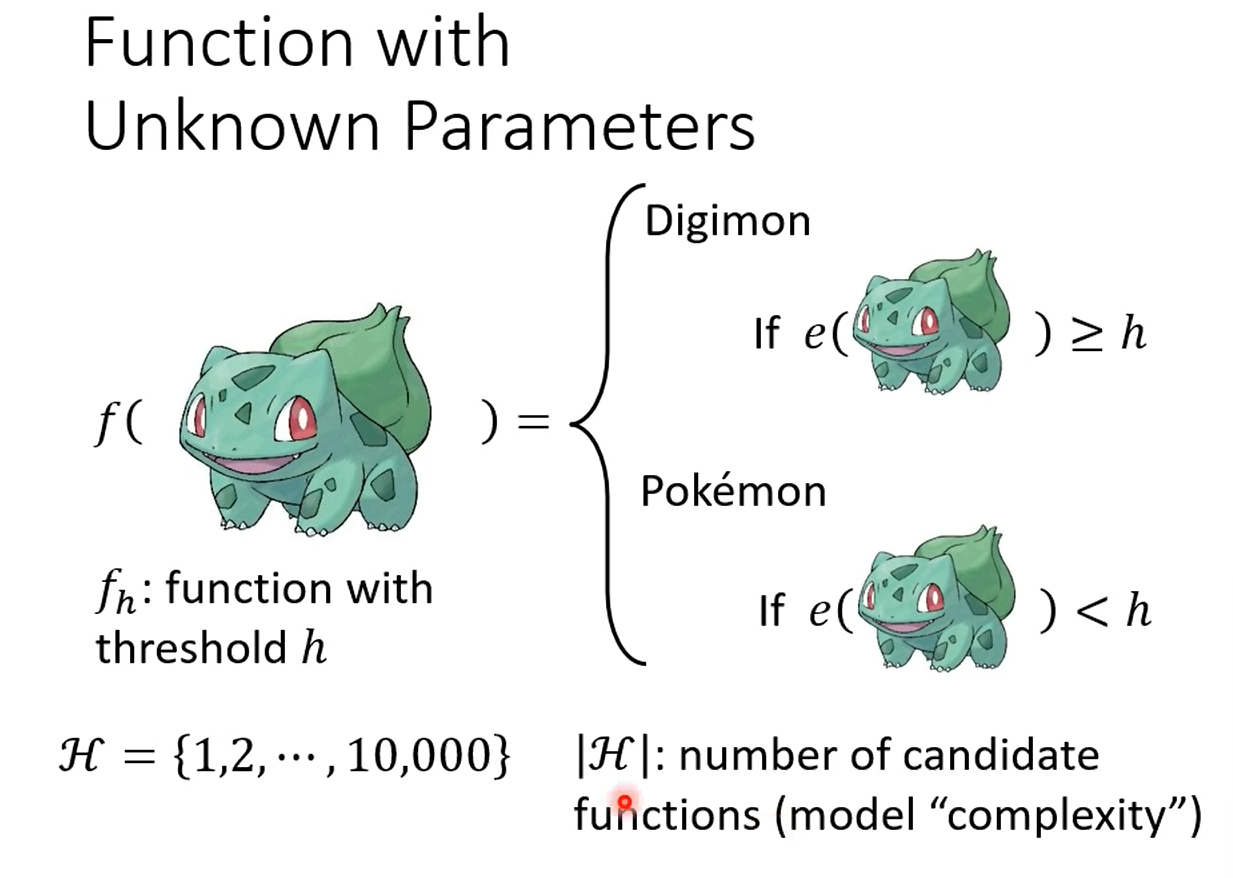

5.1 Function with Unknown Parameters

5.2 Loss of a function

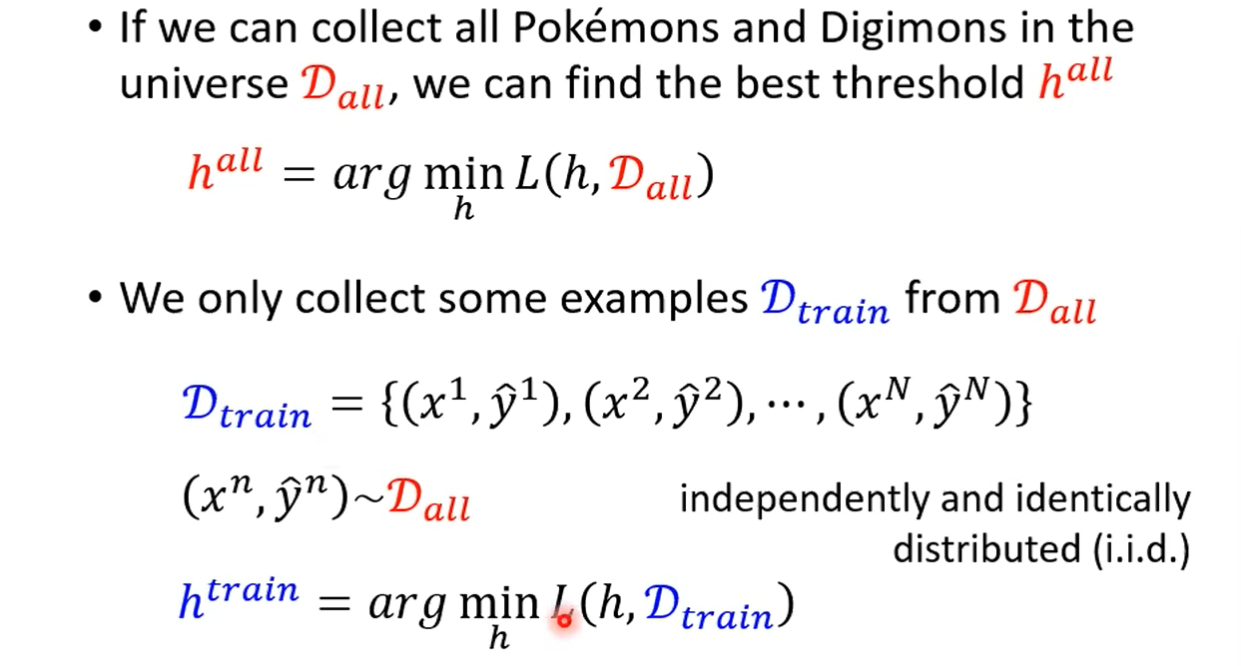

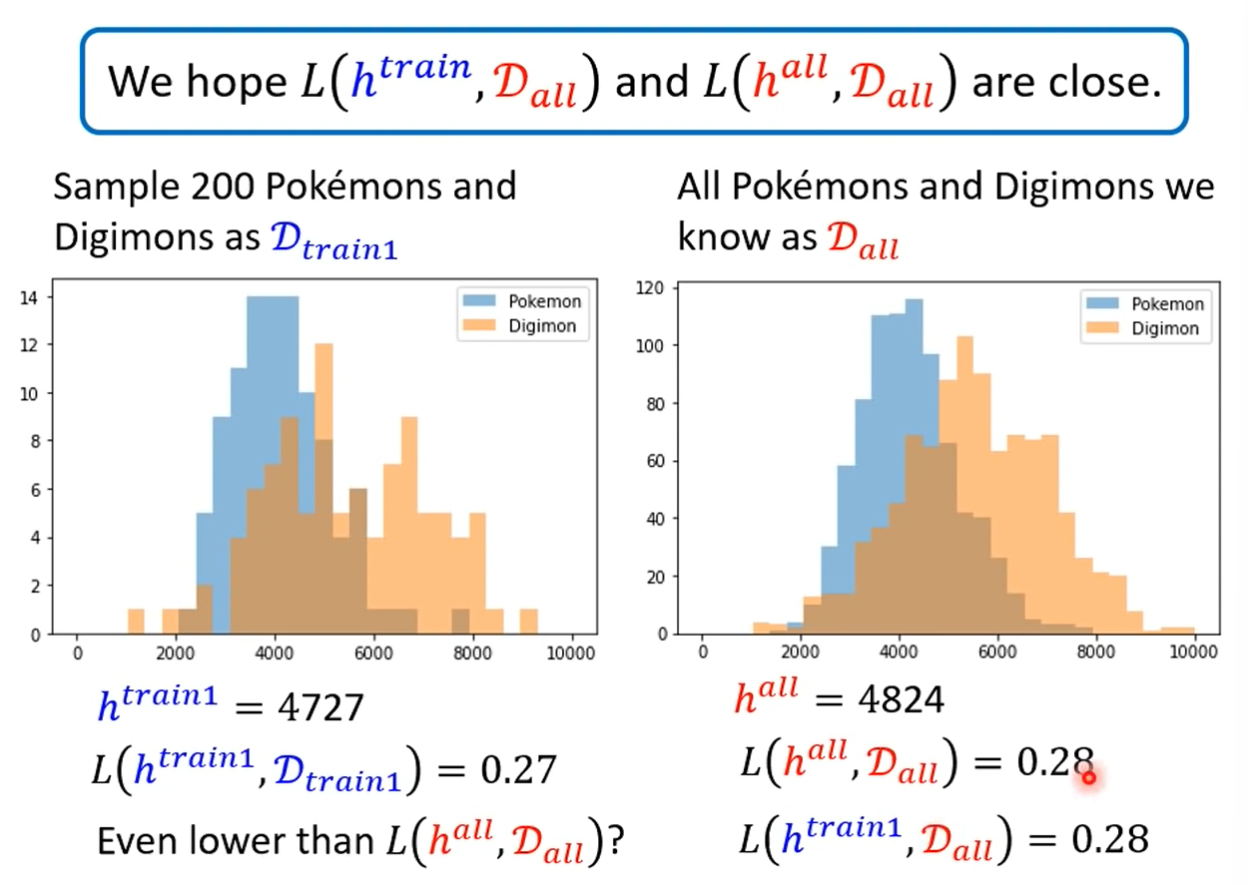

5.3 Training Examples

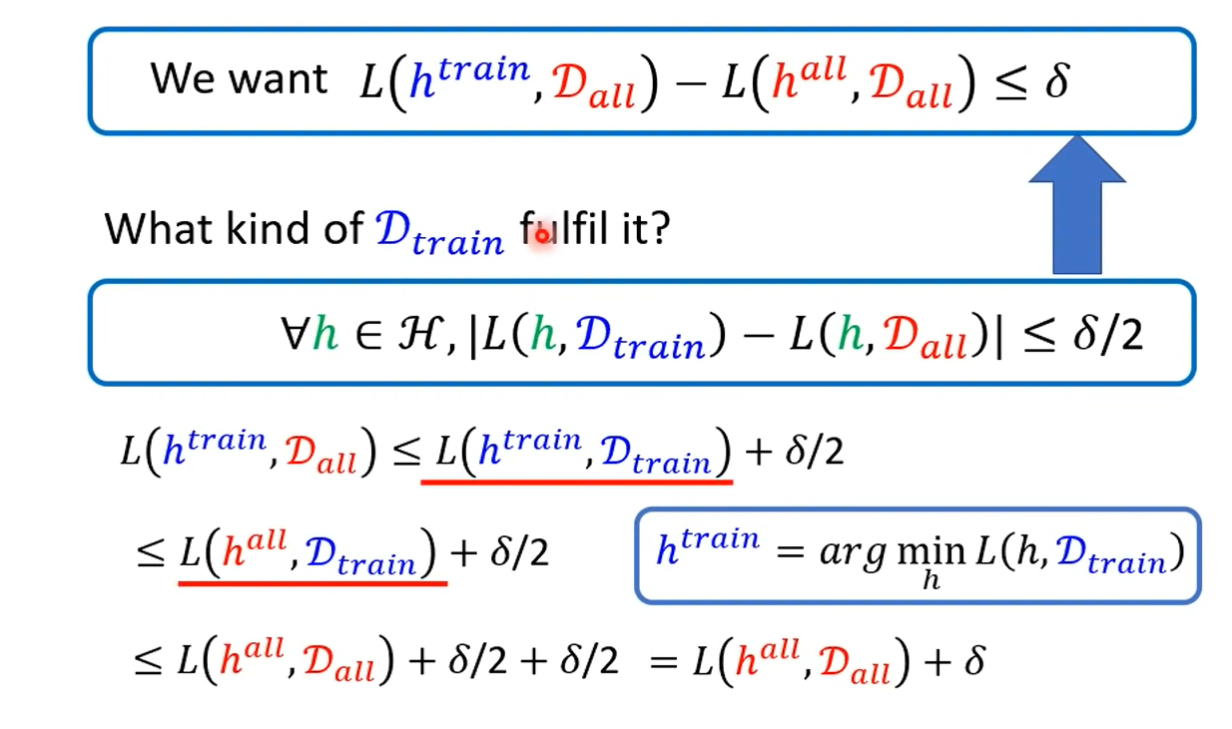

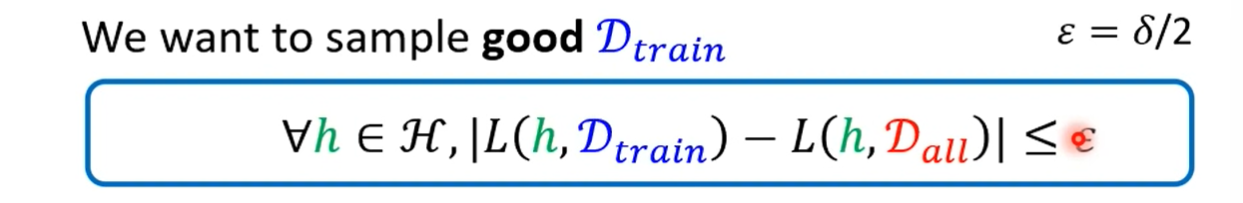

What do we want?

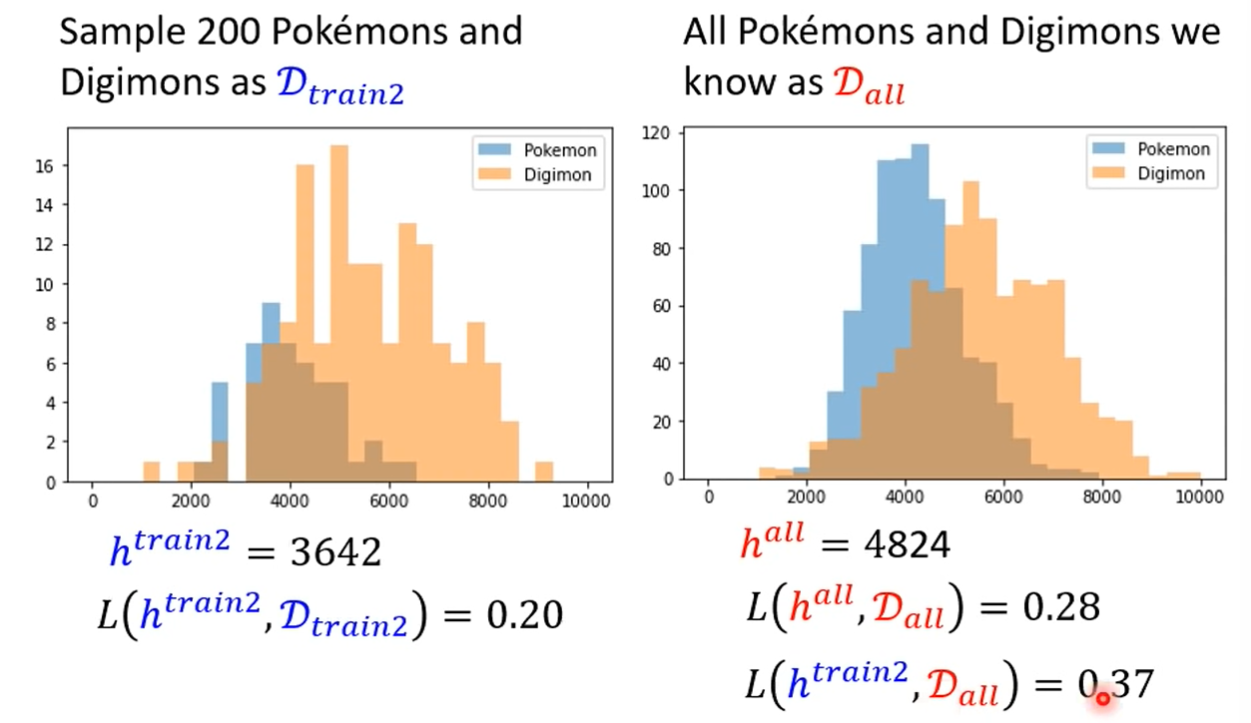

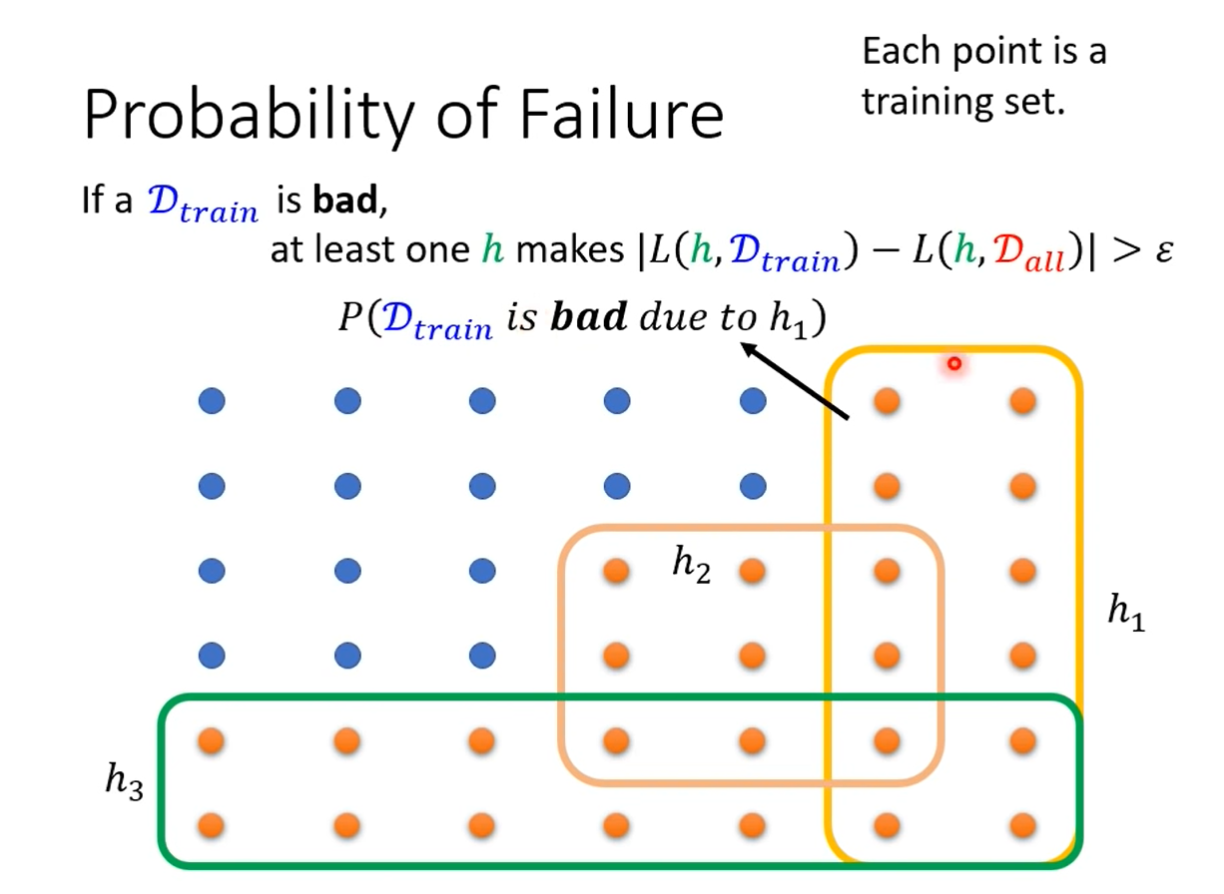

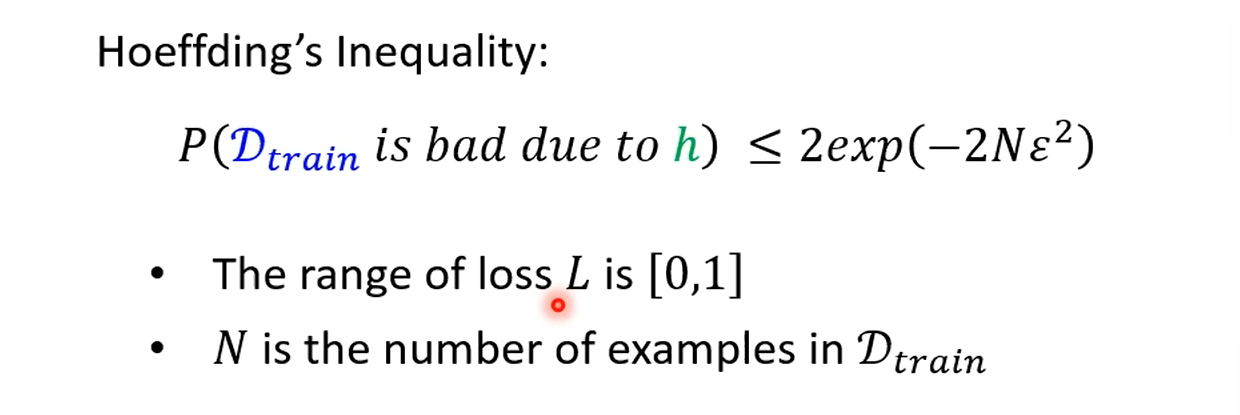

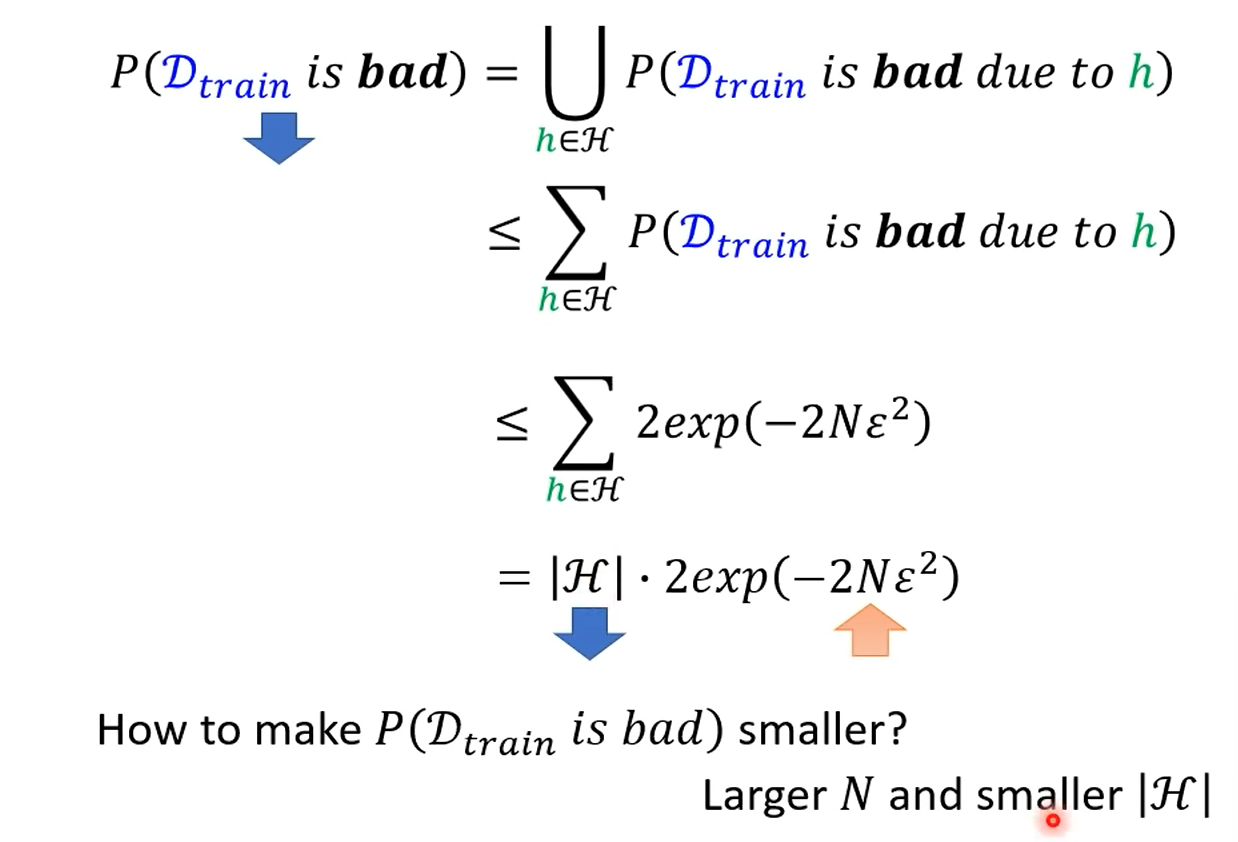

Probability of Failure

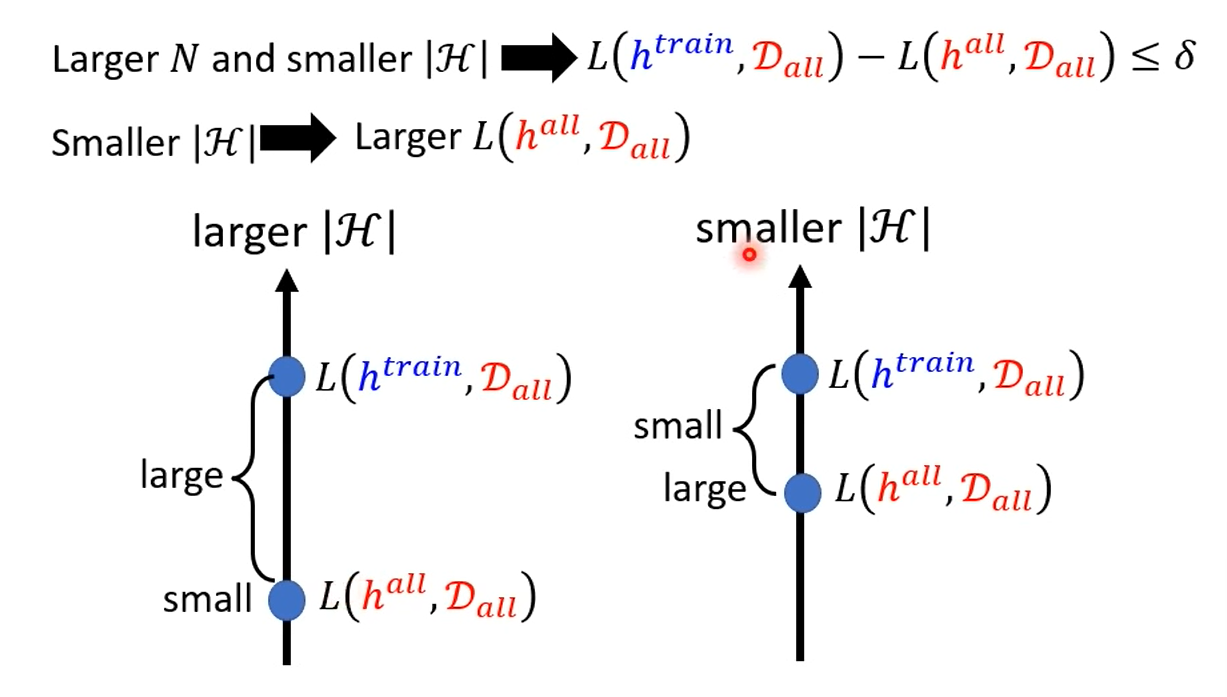

Tradeoff of Model Complexity -> Deep Learning