1 Definition: Linear Regression

Two-variable regression - how a response variable $y$ changes as the predictor (explanatory) variable $x$ changes.

Multiple regression - how a response variable $y$ changes as the predictor (explanatory) variables $x_1, x_2, \ldots$ change.
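As a minimal sketch of multiple regression, the code below fits $\hat{y} = b_0 + b_1 x_1 + b_2 x_2$ by ordinary least squares using NumPy's `np.linalg.lstsq`. The helper name `fit_multiple_regression` and the noise-free sample data are illustrative assumptions, not part of these notes.

```python
import numpy as np

def fit_multiple_regression(X, y):
    """Fit y ~ b0 + b1*x1 + ... + bk*xk by ordinary least squares.

    X has shape (n_samples, k); returns the coefficients [b0, b1, ..., bk].
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # Prepend a column of ones so the first coefficient is the intercept b0.
    design = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef

# Hypothetical noise-free data generated from y = 1 + 2*x1 - 3*x2.
X = np.array([[0, 0], [1, 0], [0, 1], [1, 1], [2, 3]])
y = 1 + 2 * X[:, 0] - 3 * X[:, 1]
print(np.round(fit_multiple_regression(X, y), 6))  # recovers intercept 1 and slopes 2, -3
```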


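Single-Variable Polynomial Regression: First Degree to Fifth Degree

The concept can be extended to polynomial regression, in which $y$ is modeled as a polynomial in a single predictor $x$: $\hat{y} = b_0 + b_1 x + b_2 x^2 + \dots + b_d x^d$ for some degree $d$.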
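A rough sketch of fitting polynomials of degree 1 through 5 to the same data with NumPy's `np.polyfit` follows. The noisy cubic sample data and the helper `rss_for_degree` are hypothetical, chosen only to illustrate the idea.

```python
import numpy as np

# Hypothetical sample data: a noisy cubic trend (illustrative only).
rng = np.random.default_rng(0)
x = np.linspace(-3, 3, 40)
y = 0.5 * x**3 - x + rng.normal(scale=1.0, size=x.size)

def rss_for_degree(x, y, degree):
    """Fit a polynomial of the given degree by least squares and return its RSS."""
    coeffs = np.polyfit(x, y, degree)   # highest-degree coefficient first
    y_hat = np.polyval(coeffs, x)       # fitted values at the data points
    return float(np.sum((y - y_hat) ** 2))

for degree in range(1, 6):
    print(f"degree {degree}: RSS = {rss_for_degree(x, y, degree):.2f}")
```

Each lower-degree polynomial is a special case of a higher-degree one, so the RSS on the fitting data can only stay the same or drop as the degree grows. A lower RSS on the same data therefore does not by itself mean a better model.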

2 Regression Strategy: Ordinary Least Squares (OLS)

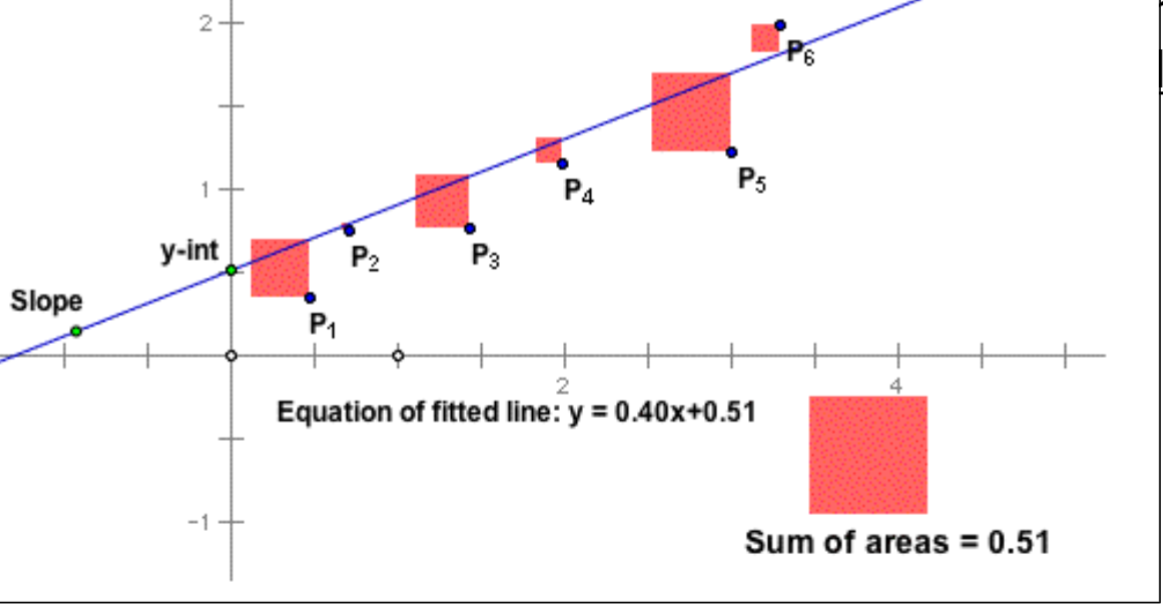

The least-squares regression line of $y$ on $x$ is the line that makes the sum of the squares of the vertical distances of the data points from the line as small as possible.

- Residual = observed value − computed (predicted) value, i.e. $e_i = y_i - \hat{y}_i$

Suppose the regression equation is $\hat{y} = mx + b$, where

- $x$ is the explanatory (predictor) variable and $\hat{y}$ is the predicted value of the response variable $y$

- $m$ is the slope of the line and $b$ is the intercept
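To make residuals concrete, the sketch below computes the predicted values $\hat{y} = mx + b$, the residuals $y - \hat{y}$, and their residual sum of squares (RSS) for one candidate line. The data points and the candidate values $m = 2$, $b = 1$ are made up for illustration.

```python
import numpy as np

def residuals(x, y, m, b):
    """Residual = observed value - computed value, for the line y_hat = m*x + b."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    y_hat = m * x + b
    return y - y_hat

def rss(x, y, m, b):
    """Residual sum of squares: the quantity least squares minimizes over m and b."""
    return float(np.sum(residuals(x, y, m, b) ** 2))

# Hypothetical data and a candidate line y_hat = 2x + 1.
x = [1, 2, 3, 4]
y = [3, 5, 8, 9]
print(residuals(x, y, m=2, b=1))  # residuals 0, 0, 1, 0
print(rss(x, y, m=2, b=1))        # 1.0
```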

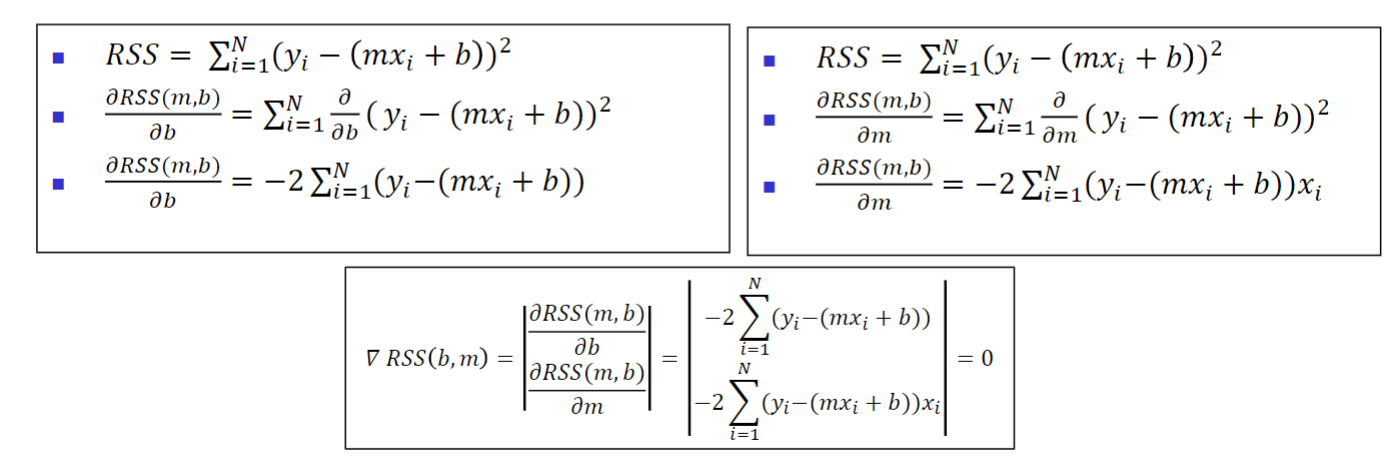

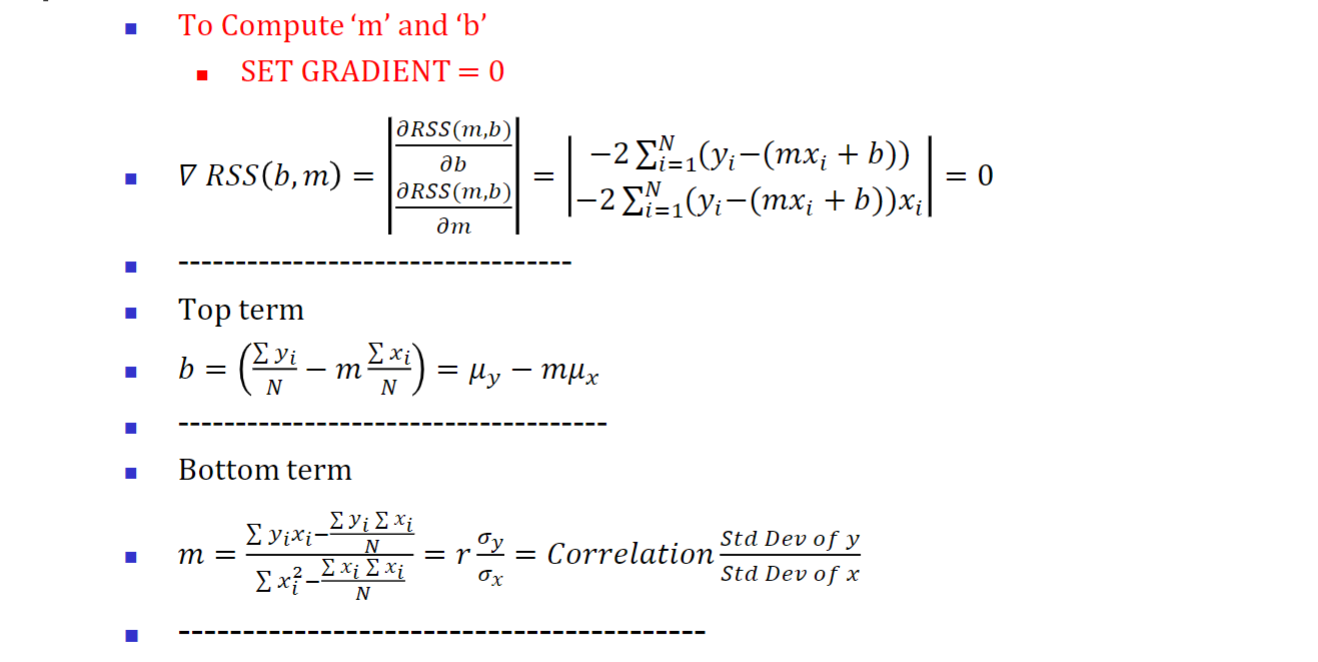

- To find the minimum of this function, take the partial derivatives of the residual sum of squares (RSS) with respect to $m$ and $b$ and set them to zero.
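Written out for $n$ data points $(x_i, y_i)$ with sample means $\bar{x}$ and $\bar{y}$, the standard derivation runs as follows. This is a worked sketch, and its notation may differ from the slide images. The expression for $m$ comes from substituting $b = \bar{y} - m\bar{x}$ into the condition on $\partial\,\mathrm{RSS}/\partial m$.

$$
\begin{aligned}
\mathrm{RSS}(m, b) &= \sum_{i=1}^{n} \bigl(y_i - (m x_i + b)\bigr)^2 \\
\frac{\partial\,\mathrm{RSS}}{\partial b} &= -2 \sum_{i=1}^{n} (y_i - m x_i - b) = 0
  \;\Longrightarrow\; b = \bar{y} - m\bar{x} \\
\frac{\partial\,\mathrm{RSS}}{\partial m} &= -2 \sum_{i=1}^{n} x_i (y_i - m x_i - b) = 0
  \;\Longrightarrow\; m = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2}
\end{aligned}
$$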

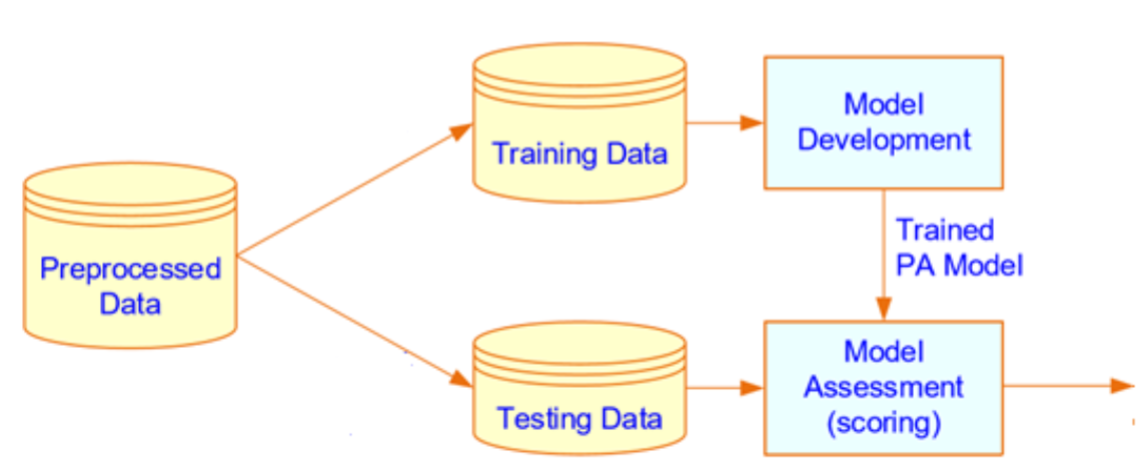
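The closed-form least-squares solution can be sketched in NumPy as below. It uses the standard OLS estimates $m = \sum (x_i-\bar{x})(y_i-\bar{y}) / \sum (x_i-\bar{x})^2$ and $b = \bar{y} - m\bar{x}$. The helper name `least_squares_line` and the sample data are hypothetical.

```python
import numpy as np

def least_squares_line(x, y):
    """Return (m, b) minimizing RSS for y_hat = m*x + b (closed-form OLS)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x_bar, y_bar = x.mean(), y.mean()
    # Slope: sum of cross-deviations divided by sum of squared x-deviations.
    m = np.sum((x - x_bar) * (y - y_bar)) / np.sum((x - x_bar) ** 2)
    # Intercept: the least-squares line passes through (x_bar, y_bar).
    b = y_bar - m * x_bar
    return float(m), float(b)

x = [1, 2, 3, 4]
y = [3, 5, 8, 9]
m, b = least_squares_line(x, y)
print(m, b)  # slope about 2.1, intercept about 1.0
```

As a cross-check, `np.polyfit(x, y, 1)` fits the same degree-1 least-squares problem and should return the same slope and intercept.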

3 Regression: Supervised Learning Method

Single-split model assessment methodology

- The model is fitted on a training sample and tested on a separate hold-out sample

- Only the accuracy on the hold-out sample is reported
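Here is a minimal sketch of single-split assessment. It assumes a random 70/30 split and uses root-mean-square error (RMSE) on the hold-out sample as the accuracy measure, because the notes do not name a metric. The helper `holdout_evaluate` and the synthetic data are hypothetical.

```python
import numpy as np

def holdout_evaluate(x, y, test_fraction=0.3, seed=0):
    """Single split: fit a line on the training part, report RMSE on the hold-out part only."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(x))                # shuffle before splitting
    n_test = int(round(test_fraction * len(x)))
    test_idx, train_idx = idx[:n_test], idx[n_test:]

    # Fit the regression line on the training sample only.
    m, b = np.polyfit(x[train_idx], y[train_idx], 1)

    # Evaluate on the hold-out sample, which the fit never saw.
    y_pred = m * x[test_idx] + b
    return float(np.sqrt(np.mean((y[test_idx] - y_pred) ** 2)))

# Hypothetical data: a linear trend plus noise.
rng = np.random.default_rng(1)
x = np.linspace(0, 10, 50)
y = 3 * x + 2 + rng.normal(scale=1.0, size=x.size)
print(f"hold-out RMSE: {holdout_evaluate(x, y):.3f}")
```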

4 Nearest Neighbor Regression

A method for predicting a numerical variable $y$, given a value of $x$:

- Identify the group of points whose $x$ values are close to the given value of $x$

- The prediction is the average of the $y$ values for that group
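The two steps above can be sketched as follows. The sketch assumes the "group" is the $k$ training points with the closest $x$ values; a fixed-width window around the given $x$ is another reasonable reading. The function `nn_predict`, the choice of $k$, and the data are illustrative assumptions.

```python
import numpy as np

def nn_predict(x_train, y_train, x0, k=5):
    """Predict y at x0 as the average y of the k training points whose x is closest to x0."""
    x_train = np.asarray(x_train, dtype=float)
    y_train = np.asarray(y_train, dtype=float)
    distances = np.abs(x_train - x0)
    nearest = np.argsort(distances)[:k]   # indices of the k closest x values
    return float(y_train[nearest].mean())

x_train = [1, 2, 3, 4, 5, 6, 7, 8]
y_train = [2, 4, 5, 4, 5, 7, 8, 9]
# The 3 nearest x values to 4.2 are 4, 5 and 3, so this averages y = 4, 5, 5.
print(nn_predict(x_train, y_train, x0=4.2, k=3))  # 14/3, about 4.667
```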

Graph of Averages

- For each value of $x$, the predicted value of $y$ is the average of the $y$ values of the nearest neighbors.

- Plot these predictions for all values of $x$. That’s the graph of averages.

- If the association between the two variables is linear, then the points on the graph of averages tend to fall on or near a straight line. That line is the regression line.
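To illustrate the last point, the sketch below builds a graph of averages on synthetic data with a linear association, again assuming $k$ nearest neighbors in $x$. It then measures how far that graph strays from the least-squares line fitted with `np.polyfit`. The helper `graph_of_averages` and the data are hypothetical.

```python
import numpy as np

def graph_of_averages(x, y, k=10):
    """For each distinct x value, average the y values of its k nearest neighbors (by x)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xs = np.unique(x)
    averages = np.array([y[np.argsort(np.abs(x - x0))[:k]].mean() for x0 in xs])
    return xs, averages

# Hypothetical data with a linear association: y = 0.5x + 1 plus noise.
rng = np.random.default_rng(2)
x = rng.integers(0, 21, size=400).astype(float)
y = 0.5 * x + 1 + rng.normal(scale=1.0, size=x.size)

xs, avgs = graph_of_averages(x, y, k=30)
m, b = np.polyfit(x, y, 1)
# Largest vertical gap between the graph of averages and the regression line.
print(np.max(np.abs(avgs - (m * xs + b))))
```

On data like this the averages track the fitted line closely. Near the ends of the $x$ range the neighbor groups are lopsided, so somewhat larger gaps there are expected.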

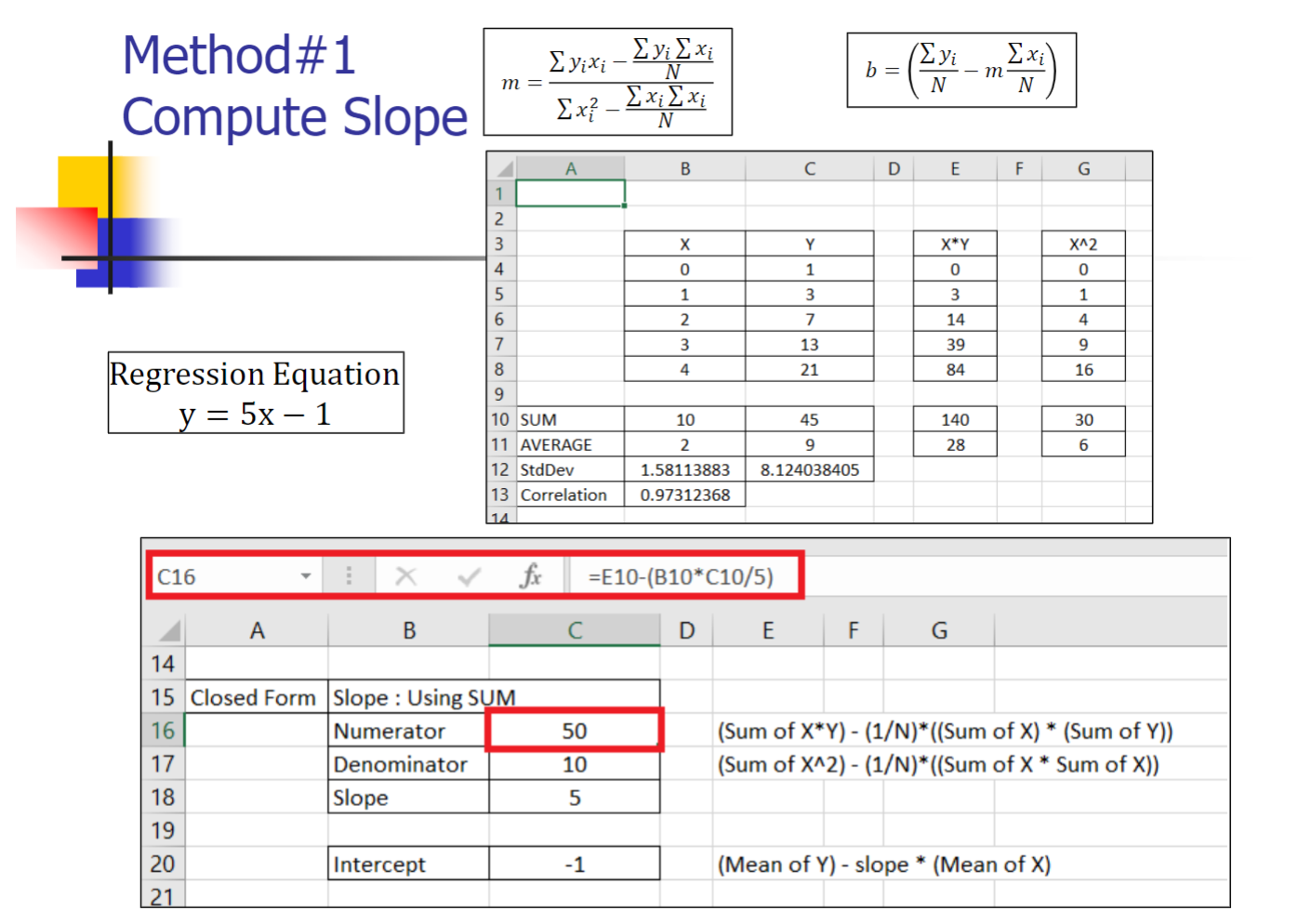

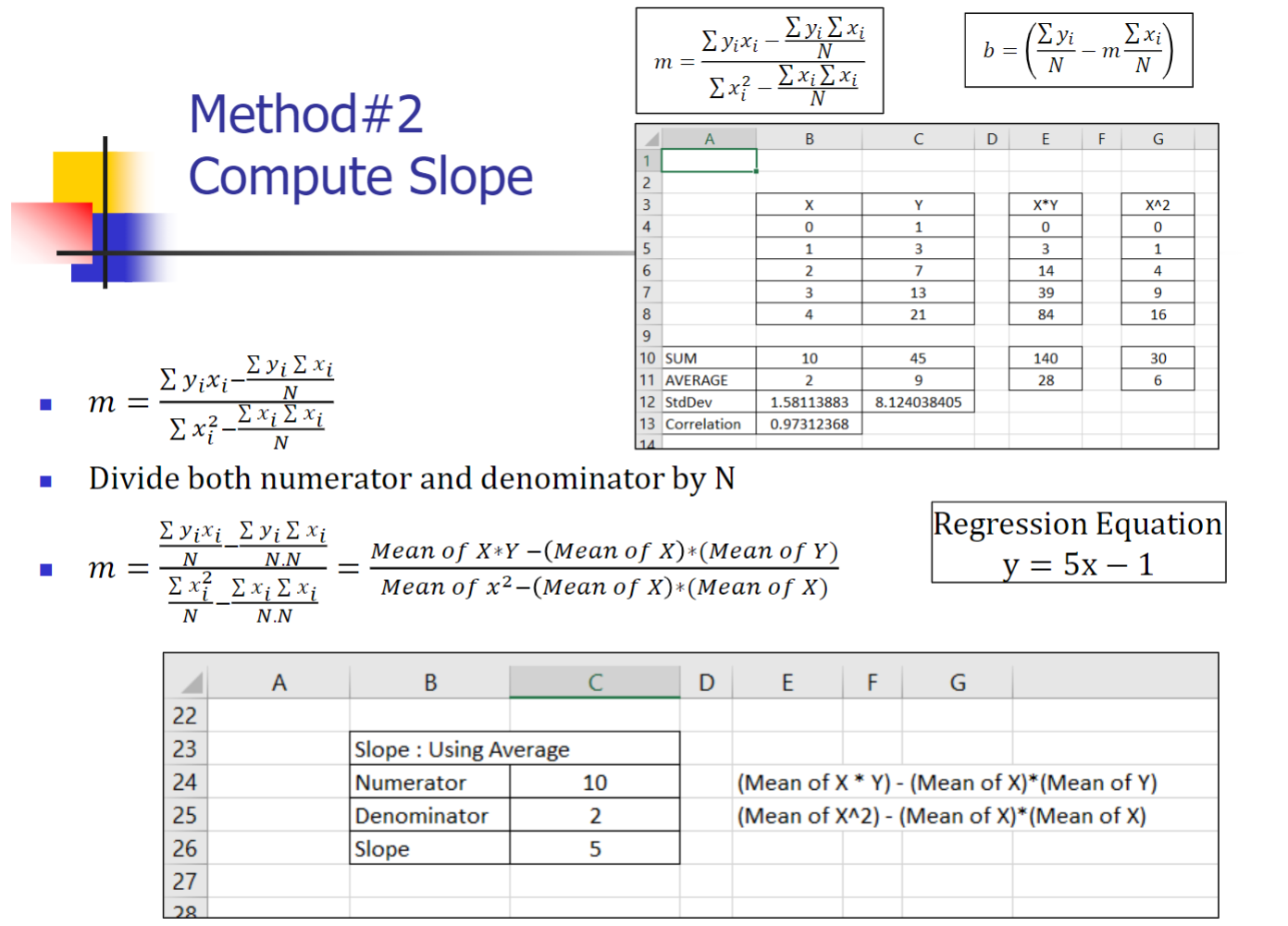

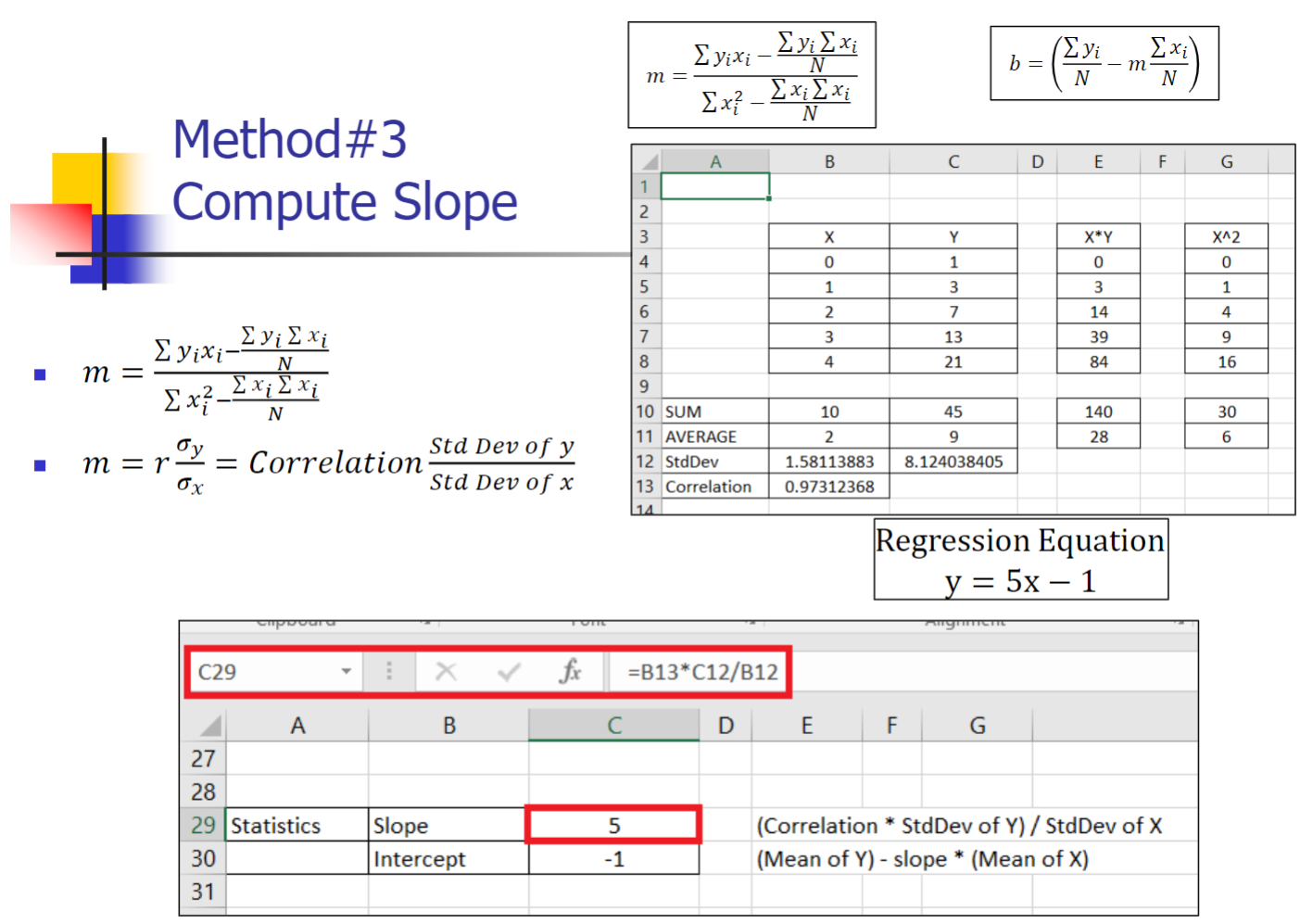

5 Solution for the Regression Line

- Method 1